In the 1960s, the late British painter and engineer Harold Cohen faced a dilemma: what makes an image? A relatively uncomplicated question on its surface, to which one might answer that the presence of marks, or simply something to stare at and process, suffices. Finding these answers unsatisfactory, Cohen took the next rational course of action. He ditched his paintbrushes, relocated to Southern California, and began studying an exciting new field at the University of California, San Diego: artificial intelligence, or AI.

“When I first met a computer, I had no idea it could have anything to do with art,” Cohen reflected in 1980. Nevertheless, he was intrigued by what he saw, or rather, what he couldn’t see. Realizing the potential for AI technology to solve his initial dilemma, Cohen began developing a painting system that could establish what minimum requirement would distinguish a set of marks from an image by simulating what distinguishes humans from machines: intentionality. During his partnership with the Stanford Artificial Intelligence Lab (SAIL) in the early 1970s, Cohen programmed AARON, a computer system designed to create images autonomously and convincingly simulate human intent. Though described by the artist himself as “crude” (prior to 1995, AARON’s simulations were produced via small “turtles,” motorized robots equipped with an ink canister and pen), Cohen’s work marked a critical milestone in the history of AI-generated imagery.

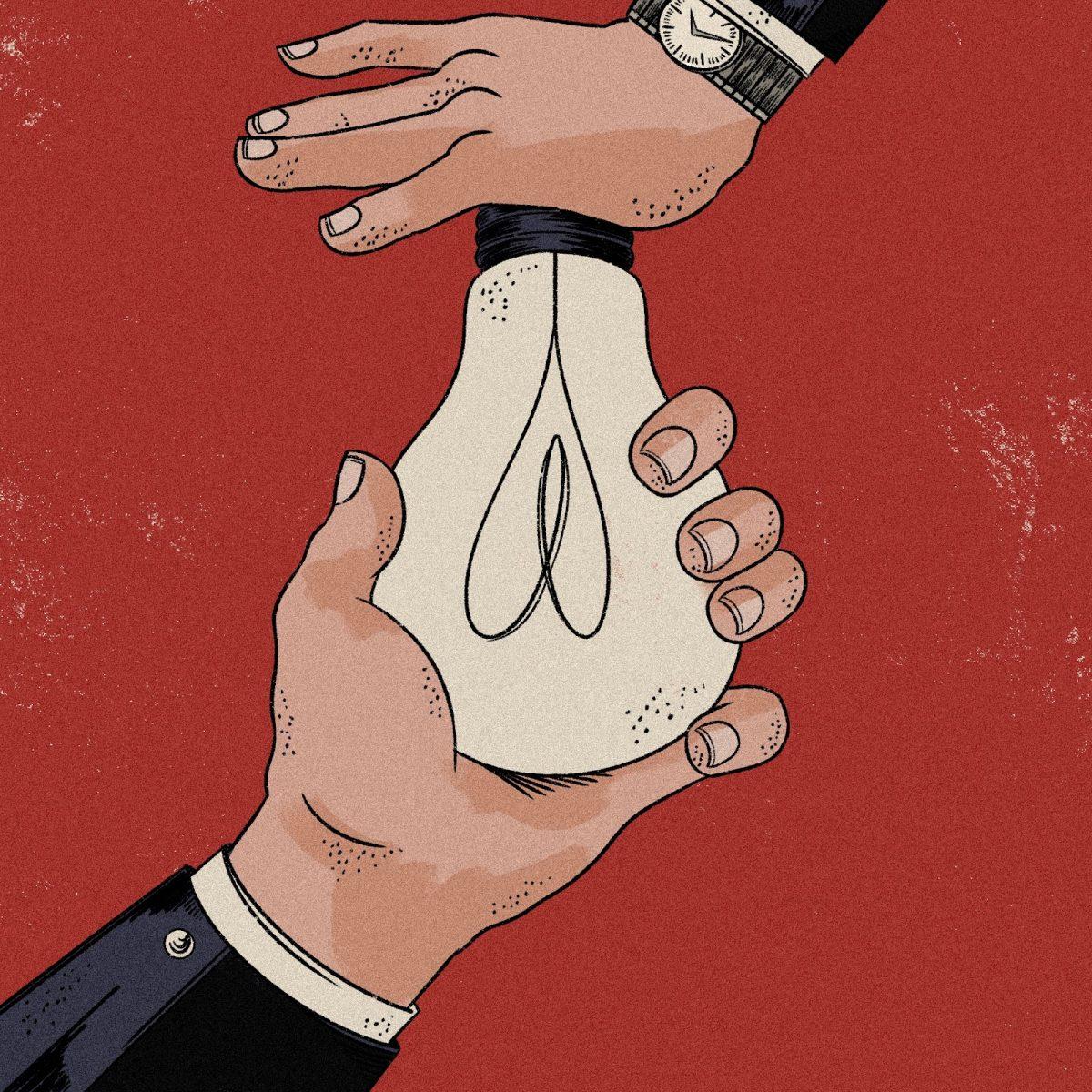

Cohen’s objective to program a system capable of mimicking human behavior has been far surpassed by modern text-to-image models like DALL-E 2 and Stable Diffusion. With time and inevitable progress, distinguishing between artwork created by humans and that of deep learning networks programmed to simulate brain processing will be challenging, if not impossible. Meanwhile, the definitions of generator and originator become increasingly blurred. With these certainties, new issues arise: the ethicality of deep learning algorithms at a fundamental level, and whether professional artists should be expected to compete with abiotic systems that, in a sense, rely on plagiarism to function.

Text-to-image models have erupted in popularity recently, with their admittedly fascinating ability to render prompts in nearly any style within seconds. You’ll likely recall the entertaining DALL-E 2 samples flooding your social media late last year: users tasking the algorithm with “raccoons playing pickleball,” or “sinking of the Titanic in a Pixar film,” and posting the results. Though varied in accuracy, the images were nevertheless impressive.

But the way in which these models function, how they’re able to generate such stunning and outlandish images, is where the problem lies. AI algorithms like DALL-E and its successor, DALL-E 2, are trained on massive data sets scraped from the web. In the case of artwork generators, artificial neural networks are fed millions of images with associated text descriptions, allowing the algorithm to process information similarly to a human brain, a concept called “deep learning.” Most content fed to these algorithms, and what becomes of it, is not at any point consented to by the originator of said content. Even if it’s copyrighted.

A lawsuit filed earlier this year seeks to hold three AI platforms and associates (Stability AI, DeviantArt, and Midjourney) accountable for this. All three companies employ the text-to-image model Stable Diffusion, developed by Stability AI. The lawsuit itself was filed by artists Kelly McKernan, Karla Ortiz, and “Sarah’s Scribbles” author Sarah Andersen. Attorney Matthew Butterick declares Stable Diffusion a “collage tool,” trained on millions of unauthorized, copyrighted images, including work by the plaintiffs that was detected in LAION Aesthetics (the data set used to train the Stable Diffusion model). While the suit acknowledges the general contrast between images fed to an algorithm and the result, it argues that the use of these original images without artists’ consent is beyond fair use. Getty Images has also since filed a lawsuit against Stability AI, accusing the company of copyright infringement by ignoring “long-standing legal protections in pursuit of their stand-alone commercial interests.” As of now, neither suit has been resolved.

Naturally, this is alarming to artists whose social media and public portfolios could be vacuumed into haphazard data sets such as LAION Aesthetics and repurposed by whichever AI model obtained their work. Take Deb Lee, a published graphic novelist and illustrator whose recognizable style was reproduced in several images by a user of an unknown AI platform. Additionally, many text-to-image models allow the commercial use of their patrons’ “creations,” so long as the AI platform is credited. Although few would argue that professional artists have ever had it easy, their livelihoods stand on shaky ground in the face of modern AI models.

Portland muralist and illustrator (and “all-around art person”) Molly Mendoza says that text-to-image models, even in their primitive stages, have always unsettled them. “I can’t say that I was up-in-arms yet about how ethical it was,” she clarifies. “As the technology got more advanced, and people started specifically scrubbing for artists’ work, that’s when it got scary.” What’s especially disturbing to her is the lack of concern among not only “AI bros,” but her peers. “People say they’re just having fun with this, but I don’t think they realize they’re feeding a bigger beast,” they explain. While the harm caused by this technology isn’t obvious to users, these models rely on stolen content to function. After all, gaining permission to access every artist’s digital portfolio, only for their life’s work to be recycled without compensation into the limitless concoctions users may prompt an engine with, would be no trivial task. This is accompanied by the terrifying added benefit of these models’ capacity to identify key details of a style detected in their data set and emulate it in future generated images when prompted, as was the case for Deb Lee. In Mendoza’s view, the outcome of AI companies’ apathy toward the value of an artist’s work and identity is bleak. “If they can generate a cover for an editorial, or a kids’ book, which they have, I’m out of a job, and so are tons of my peers,” she says. “We’re no longer needed.”

Despite the competitive disadvantage and even harsher labor market these systems may create for working artists, many AI enthusiasts maintain an ends-justify-the-means rationale. Stable Diffusion Frivolous, a website created to combat “misinformation” surrounding Stability AI’s text-to-image model, argues that taking “a byte or so from a work, to empower countless millions of people to create works not even resembling any input,” ought to be considered fair use (the limited use of copyrighted material the public may make without permission under certain circumstances). While it is true that very little of any one original image is detectable in a final product of AI platforms, their capacity to mimic styles identified within similar visual information (especially on platforms advertising “style transfer” features, like NightCafe, another Stable Diffusion generator) can be exploited by users to “create” work resembling the input, thus swiping artists’ identities as creators and crowding the market with plagiarized images at a rate no human alone could possibly compete with. To non-artists, I suppose this could be described as “empowerment.”

Vague terms such as “empower” are frequently used to champion the advantages of AI image generators. One particularly overused claim in this context is accessibility. Stable Diffusion Frivolous later explains that users of text-to-image models are simply “enjoying their ability to realize their newfound artistic dreams with greater ease than before,” the closest I’ve come to proof that this is all fueled by individuals who were encouraged as children to pursue STEM based on their crayon portraits. Broad declarations of how open-source AI will “enable billions to be more creative, happy, and communicative,” and “bring the gift of creativity to all,” are common. (Both of these statements were made by Stability AI CEO Emad Mostaque upon the release of Stable Diffusion.) Although they make for great marketing proclamations, it’s difficult to ignore the hollowness of their usage. “It’s taking a word [accessibility] that is specifically used to broaden the way we show up for each other, and using it as jargon to push an agenda,” Mendoza explains. The agenda ultimately being to “avoid paying an artist to create.” In her view, these fantastic claims are nothing more than a disguise for furthering the commodification of art.

Of course, not all artists are necessarily pessimistic about what lies ahead for them. Among the artists I spoke with at the Alberta Street Gallery, the attitude surrounding AI was cautious, yet resilient. Michelle Purvis, fine artist and manager of the gallery, describes her initial encounter with text-to-image models as experiencing the stages of grief. “At first I was angry, but by the end I accepted it,” she explains. While she admits the technology is intimidating, she doesn’t disregard its uses as a tool or view it as potential competition. “Technology has always altered artists’ way of doing things, and you have to adapt,” she says. Purvis personally rejects the idea that AI-generated images hold artistic value, arguing that typing alone doesn’t constitute the physical and emotional connection and involved decision-making that fuel artistic expression.

Generally, the gallery artists responded to the prospect of their work being scraped and repurposed with expressions of frustrated familiarity. “It makes me want to take all of my stuff offline,” Purvis admits. However, she feels the risk of having her work taken without her consent is simply part of the deal when you upload your efforts to social media. “People steal stuff off Facebook and Instagram all the time,” interjects fiber artist and gallery regular Kim Tepe. “It’s not a new problem, just expanded.” It’s true that artists with a decent presence online have had their work ripped off prior to the emergence of text-to-image models. Creators’ work is plastered on tote bags and plagiarized prints by fractious e-commerce companies constantly, likely without them ever knowing it. “But,” Mendoza asks, “can we say [data scraping] sucks, too?” While art theft is inevitable regardless of the times we’re in, this shouldn’t stifle artists’ voicing of discomfort or irritation around a novelty that is inherently discomforting and irritating. Especially to those within fields it threatens to make obsolete.

David Friedman, who was displaying his intricate paper cutting work at the gallery, was uniquely candid about his worries surrounding text-to-image models and ever-improving AI. “Honestly, I think it’s uncontrollable,” he comments. “It’s like Pandora’s box.” Having tried an image generator for himself, he admits that the technology is fascinating, if also slightly scary. “This is a machine that takes pixels from other art, and combines it into some [fusion] of elseness,” Friedman explains.

While impressive, these resulting fusions of elseness still complicate the lives of professional artists going forward. Not only by scraping works and blurring the legal definitions of ownership, or devaluing and poaching artistic style in the interest of cheap users, but also by plaguing the future with “what-ifs.” What if consumers favor AI-generated imagery, or can’t differentiate between that and the original; what if AI models become private, and corporations begin employing abiotic labor that doesn’t tire, pay rent, or unionize; what if art loses all meaning? “Everybody has the ability to create something that would not exist without them, and that’s never going away,” Friedman says. “In that sense, I feel calm about the future of art.” Purvis has a similarly hopeful view, arguing that those who value art will continue to show up for it. “We all want human connection, to be here, and see and touch the physical thing,” she claims. “Art is not going away.”

While there’s no telling the ultimate fate of AI companies and those they impact, less optimistic artists have begun to invent methods of directing it. Aside from legal action, creators are developing tools to disrupt algorithms or even prevent them from recognizing their work. A recently released program called Glaze allows creators to upset an algorithm’s ability to replicate attributes of their style across new images, with no visible changes to the original work. Protestors on ArtStation, a platform flooded with AI-generated content, are posting work overlaid with “AI” in a stenciled font, crossed out in red.

AI has advanced far beyond what Cohen and his contemporaries could ever have predicted when the field was young. He likely didn’t anticipate a time when it would be used against artists like himself. Unconvinced of her livelihood’s resilience, Mendoza says they’ll “continue to be a stick in the mud” about AI. Despite the uphill battle, the stakes at hand are surely worth the effort.