If you’re a teenager, you may find yourself, like many of your peers, lying in bed scrolling mindlessly and allowing social media to pull your thoughts away from all other parts of your life. Just one click and you find a post with the caption, “when the worst person you’ve ever met reposts a video about how everyone else is the problem.” Scroll through the comments and everyone is in agreement: “those people are so toxic,” “those people are so oblivious,” “those people…”

Suddenly, scrolling doesn’t feel as innocent as watching a compilation of videos about puppies, and instead, you become increasingly aware of the pool of dread building in your stomach. Because, while social media can be used as an escape from the real world, there is no way to truly keep reality off the internet. The algorithms in place on these apps don’t only mean wasted hours scrolling to avoid productivity; they also keep people stuck. Stuck in negative mindsets. Stuck in negative emotions. Stuck in negative behavior.

As phones have become part of daily life and teens struggle to balance their time online with real life, scenarios like the aforementioned post arise: moments when what people perceive in their real lives clashes with what they are viewing online. Social media, obviously, is not the cause of all negative feelings, mental health issues, or conflict; however, it has been shown to exacerbate these emotions beyond what they would have been without its interference.

Apps like TikTok and Instagram are built on algorithms that cater to a user’s interests. Designed to rope viewers in and increase both profit and time spent on these platforms, these algorithms can create “echo chambers,” a term for environments that reinforce the same perspectives and preexisting beliefs without leaving room for varying voices or debate.

Online communities formed around shared interests through these algorithms can be as innocent as sharing book recommendations or baking recipes. However, when people are spending hours and hours every week glued to their phones, these communities can become harmful because users hear only one perspective. In an effort to keep the consumer linked to an app, these platforms aim to match all users with posts that fit their demonstrated interests, perspectives, and values. And soon, someone is only getting one viewpoint on controversial discourse, current events, or even just relatable experiences.

Sharing common experiences has become a key part of many social media platforms catered to teens, as more and more influencers become popular through “relatable” content. Even though on the surface it’s completely innocent to give a like or two to posts referencing your current struggles, these algorithms do not take it lightly. Suddenly, every video in your algorithm is referencing deep depressive episodes or conflicts like the one you’ve been having with your friend. And 15-second videos layered with depressing music at two times speed constantly remind you, every time you’re on your phone, just how relatable it is that your mental health issues will never leave or that you will continue to lose friendships you thought were going to last forever.

Algorithms based on pushing relatable content may not initially be the cause of many of these struggles, but these repeated posts all lack the nuance it takes to truly tackle these problems. Anna York, a teacher of Advanced Placement Psychology here at Franklin, describes how, because of this, “people can get stuck with this concept of rumination where you get stuck in these negative feelings. And it’s just this cycle [of] when you’re in a negative feeling … you can stay in that.”

So what? Someone is a little more sad after watching a sad video layered with a sad quote layered with a sad song layered with a sad caption layered with sad comments? These algorithms don’t only impact a person’s mental health: Staying stuck in negative mindsets that are exacerbated via social media can impact everything and everyone around the user. When one remains in algorithms that perpetuate the same mindset and behavior, there is no room left for accountability or growth.

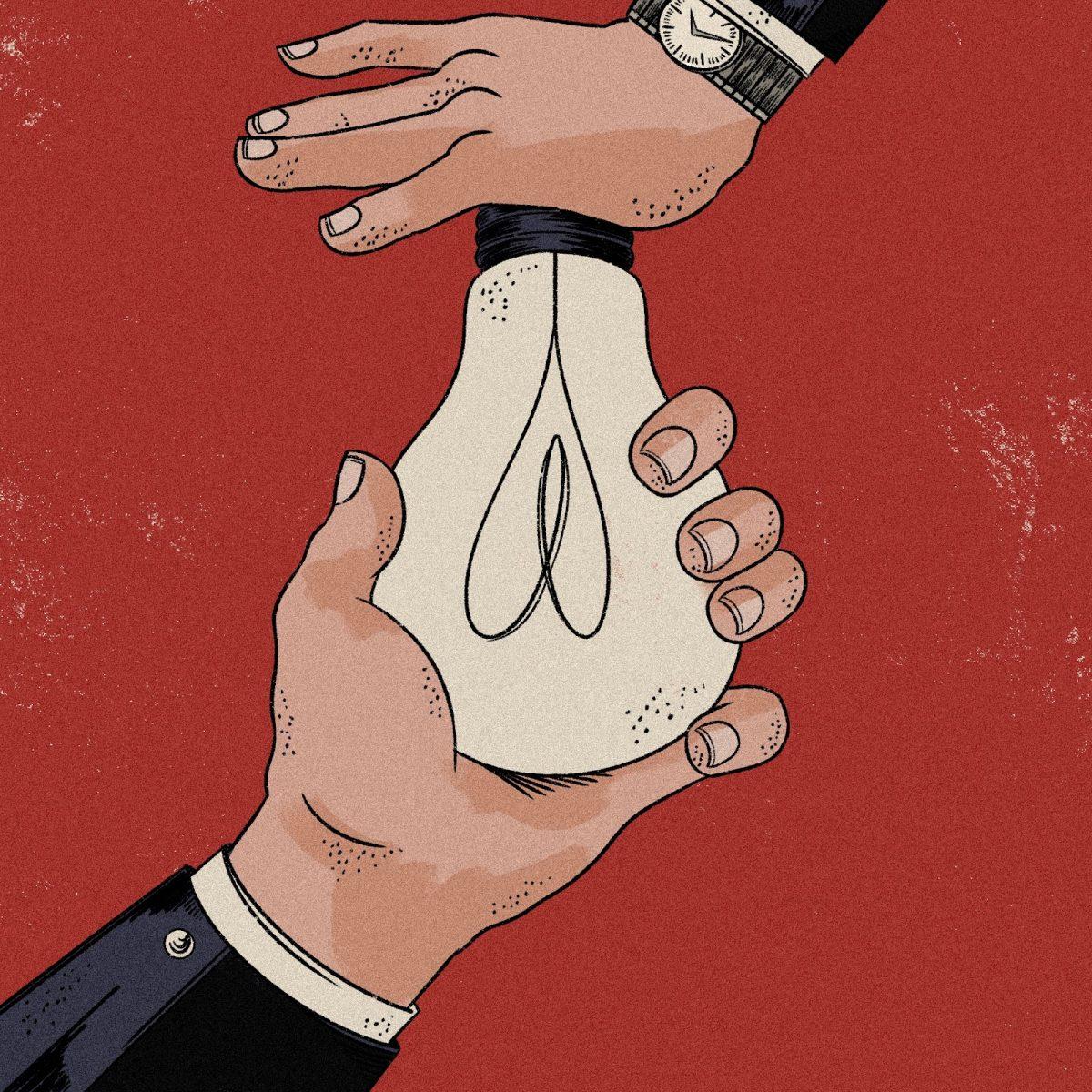

But really, if someone, as these posts declare, was “the worst person they knew,” how could they be so sure of themselves posting and reposting countless videos painting themselves as the victim? Is this person really that oblivious? Maybe they are, or maybe they are being fed the same variation of an algorithm that everyone is being fed. One that validates their emotions and experiences, but simultaneously dismisses and ignores any of the varying perspectives that might add nuance to how they view their own actions.

York adds to this idea, indicating that people inherently want to be surrounded by the environments these algorithms create. “We want to find things that validate what we think, and so it’s very easy to find confirmation bias on the internet today, just because there’s so much information that you can find anything to agree with.”

Confirmation bias, which is the tendency to only seek out perspectives that agree with your own, is a key reason these echo chambers persist. However, hearing only from people who agree with you can have lasting impacts. As York describes, “You find groups on social media and other places where you can just find other people that feel the way that you do and that’s all you interact with. And that gives you a very biased perspective of what’s going on.”

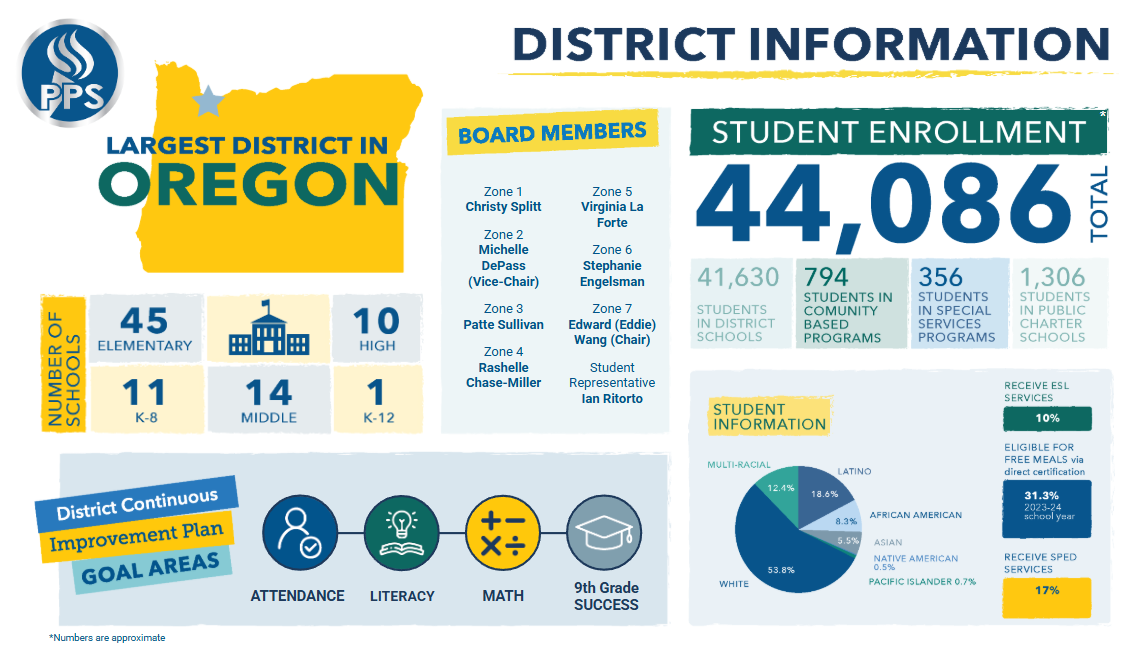

Even though taking accountability is a big part of learning from negative situations and conflict in one’s life, validation for one’s experiences and feelings is still a huge aspect of growth. Myles Worthy is a confidential advocate in Portland Public Schools (PPS) who works to listen to and support teens through anything they are going through. Worthy emphasizes how the first steps towards growing past a situation can lie in validation, as “oftentimes, when we talk about accountability, it gets confused with punishment, and that makes people less likely to be held accountable or even want to talk about accountability.” When someone comes to you crying, they don’t want to be told they are the “problem”; they want to be heard.

Having support from people in your life and reaching out to support systems, such as the confidential advocates here in PPS, are very important steps towards growth. What makes real-life support so much healthier for growth than online validation is the added nuance. You’re talking to a person who can converse with you with more of an understanding of your struggles than a random person on the internet can via a relatable post or comment thread.

Additionally, it is important to treat the validation and support you get from support systems during hard situations as a single perspective. When reaching out, most people can expect their friend or parents to only give advice and validation from their own perspective; they can’t speak for thousands. This differs, in a way, from the posts pushed by these algorithms, which can hold increased weight in users’ minds when a post becomes not just a post, but one holding the weight of the millions of people who may have already liked it, related to it, and validated that perspective for you again. Suddenly, a comment isn’t just a comment but one of the thousands that say “exactly what you were thinking.”

Growth and accountability do not have to be isolated from validation for someone’s experiences, even if they are in the wrong — even if they are “the worst person you’ve ever met.” It becomes an issue when the complex and well-rounded validation given from a conversation in real life is replaced with the cheap validation of the posts, comments, and likes found in these echo chambers.